Section: Research Program

Perception and Situation Awareness

Participants: Christian Laugier, Agostino Martinelli, Jilles S. Dibangoye, Anne Spalanzani, Olivier Simonin.

Robust perception in open and dynamic environments populated by human beings is an open and challenging scientific problem. Traditional perception techniques do not provide an adequate solution, mainly because such environments are uncontrolled (partially unknown and open) and impose strong constraints (in particular high dynamicity and uncertainty). Proposed solutions therefore have to account simultaneously for real-time processing, temporary occlusions, dynamic changes and motion prediction.

Bayesian perception

Context and previous work. Perception is known to be one of the main bottlenecks for robot motion autonomy, in particular when navigation in open and dynamic environments is subject to strong real-time and uncertainty constraints. Traditional object-level solutions (object recognition based on image processing) still lack efficiency and robustness when operating in such complex environments. To overcome this difficulty, we proposed, in the scope of the former e-Motion team, a new paradigm in robotics called “Bayesian Perception”. This approach is founded on the concept of the “Bayesian Occupancy Filter (BOF)”, initially proposed in the PhD thesis of Christophe Coué [42] and further developed in the team [58] (the Bayesian programming formalism developed in e-Motion pioneered, together with the contemporary work of Thrun, Burgard and Fox [94], a systematic effort to formalize robotics problems under probability theory, an approach that is now pervasive in robotics).

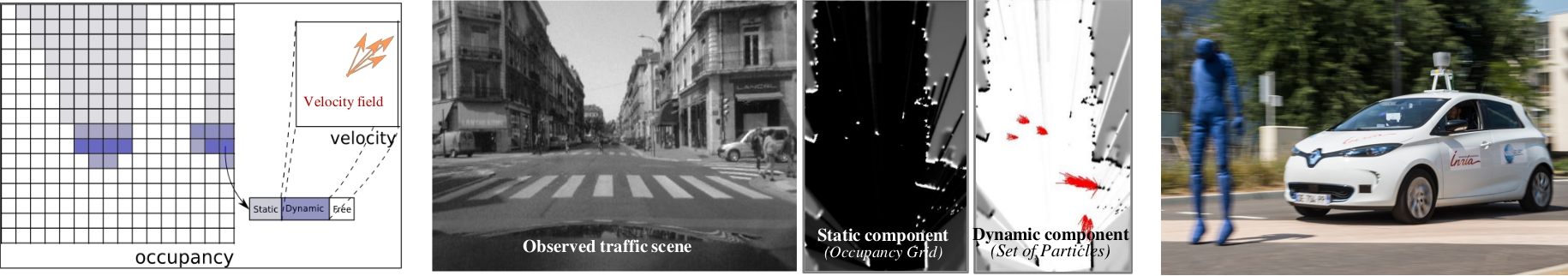

The basic idea is to combine a Bayesian filter with a probabilistic grid representation of both the space and the motions (see Fig. 2). This approach can be seen as an extension, for uncertain dynamic scenes, of the initial concept of "Occupancy Grid" proposed in 1989 by Elfes (A. Elfes, "Occupancy grids: A probabilistic framework for robot perception and navigation", Ph.D. dissertation, Carnegie Mellon University, Pittsburgh, USA, 1989). It allows the filtering and fusion of heterogeneous and uncertain sensor data by taking into account the history of the sensor measurements, a probabilistic model of the sensors and of the uncertainty, and a dynamic model of the motions of the observed objects.
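
As a point of reference for the grid-based idea, the following minimal sketch shows the classic static occupancy-grid update in log-odds form. It is not the BOF itself, which additionally estimates motion at the cell level. The names `update_grid`, `occupancy`, `P_HIT` and `P_MISS`, as well as the inverse sensor model values, are assumptions made for illustration.

```python
import numpy as np

# Assumed inverse sensor model: probability that a cell is occupied
# given that the sensor reports a hit or a miss in that cell.
P_HIT, P_MISS = 0.7, 0.4


def logit(p):
    """Convert a probability to log-odds."""
    return np.log(p / (1.0 - p))


def update_grid(log_odds, hits, misses):
    """Bayesian update of per-cell log-odds from boolean hit/miss masks."""
    updated = log_odds.copy()
    updated[hits] += logit(P_HIT)
    updated[misses] += logit(P_MISS)
    return updated


def occupancy(log_odds):
    """Convert log-odds back to occupancy probabilities."""
    return 1.0 / (1.0 + np.exp(-log_odds))


# 3x3 grid with an uninformative prior (probability 0.5, log-odds 0).
grid = np.zeros((3, 3))
hits = np.zeros((3, 3), dtype=bool)
hits[1, 1] = True      # sensor repeatedly sees an obstacle in the centre cell
misses = np.zeros((3, 3), dtype=bool)
misses[0, 0] = True    # sensor repeatedly sees free space in one corner

for _ in range(3):     # accumulate the history of three measurements
    grid = update_grid(grid, hits, misses)

p = occupancy(grid)
```

In this sketch, repeated consistent measurements push the centre cell towards "occupied" and the corner cell towards "free", while unobserved cells stay at the prior.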

In the scope of the Chroma team and of several academic and industrial projects, we have continued to develop and extend our Bayesian Perception concept under strong embedded implementation constraints. This work has already led to more powerful models and more efficient implementations, e.g. the HSBOF (Hybrid Sampling Bayesian Occupancy Filter) approach [76] and the CMCDOT (Conditional Monte Carlo Dense Occupancy Tracker) framework [84], which is still under development.

Current and future work addresses the extension of this model and its software implementation.

Objective: extending the Bayesian Perception paradigm to the object level. We aim to define a complete framework for this extension. The main objective is to be simultaneously more robust, more efficient for embedded implementations, and more informative for the subsequent scene interpretation step.

We propose to robustly integrate higher-level functions such as multi-object detection and tracking or object classification. The idea is to avoid well-known object-level detection errors and data association problems by reasoning simultaneously at the grid level and at the object level, extracting, identifying, tracking and classifying clusters of dynamic cells (first work has been published in [84]).

Software development: new approaches for software / hardware integration. The objective is to improve the efficiency of the approach (by exploiting its highly parallel nature), while drastically reducing important factors such as the required memory size, the size of the hardware component, its price and its energy consumption. This work is necessary for studying embedded solutions for the future generation of mobile robots and autonomous vehicles.

Situation Awareness and Prediction

Context. Predicting the evolution of the perceived actors is an important ability required for navigation in dynamic and uncertain environments, in particular to allow safe on-line decisions. We have recently shown that an interesting property of the Bayesian Perception approach is its ability to generate short-term conservative predictions (i.e. assuming that motion parameters remain stable over a short period of time) of the likely future evolution of the observed scene, even if the sensing information is temporarily incomplete or unavailable [76]. But in human-populated environments, estimating more abstract properties (e.g. object classes, affordances, agents' intentions) is also crucial to understand the future evolution of the scene.
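
The notion of a short-term prediction under stable motion parameters can be illustrated with a generic constant-velocity propagation, where uncertainty grows while no measurement is available. This is only a sketch of that general idea, not the prediction mechanism of the Bayesian Perception framework. The function name `predict_constant_velocity`, the state layout and the process noise value `q` are assumptions.

```python
import numpy as np


def predict_constant_velocity(x, P, dt, steps, q=0.5):
    """Propagate state [px, py, vx, vy] and covariance P without measurements.

    q is an assumed process-noise intensity; the noise model Q = q * dt * I
    is a deliberate simplification.
    """
    F = np.eye(4)
    F[0, 2] = F[1, 3] = dt          # position += velocity * dt
    Q = q * dt * np.eye(4)
    trajectory = []
    for _ in range(steps):
        x = F @ x                   # velocity is kept constant
        P = F @ P @ F.T + Q         # uncertainty grows at every step
        trajectory.append((x.copy(), P.copy()))
    return trajectory


# Hypothetical pedestrian at the origin moving at (1.0, 0.5) m/s.
x0 = np.array([0.0, 0.0, 1.0, 0.5])
P0 = 0.1 * np.eye(4)
traj = predict_constant_velocity(x0, P0, dt=0.1, steps=10)
final_x, final_P = traj[-1]
```

The growing covariance is what makes such a prediction usable only over a short horizon: after a few steps without sensing, the predicted position becomes too uncertain to support decisions.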

Objective. We aim to develop an integrated approach for “Situation Awareness & Risk Assessment” in complex dynamic scenes involving multiple moving agents (e.g., vehicles, cyclists, pedestrians), whose behaviors are most of the time unknown but predictable.

Our approach combines machine learning, used to build a model of the agents' behaviors, with generic motion prediction techniques (Kalman-based, GHMM [98], Gaussian Processes [92]). A major challenge of prediction in multi-agent environments is to take into consideration the interactions between the different agents (traffic participants). Existing interaction-aware approaches estimate exhaustively the intent of all road users [59], assume a cooperative behavior [88], or learn the policy model of drivers using supervised learning [50]. In contrast, we adopt a planning-based motion prediction approach, which is a framework to predict human behavior [102], [56], [27]. Planning-based approaches assume that humans perform a task by minimizing a cost function that depends on their preferences and the context. Such a cost function can be obtained, for example, from demonstrations using Inverse Reinforcement Learning [75], [36]. This constitutes an intuitive approach and, more importantly, enables us to overcome the limitations of other approaches, namely high complexity [59], unrealistic assumptions [88], and overfitting [50]. We have recently demonstrated the predictive potential of our approach in [26].
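
The planning-based assumption can be sketched as follows: given a cost map that encodes an agent's preferences, the predicted path is the one that minimizes accumulated cost towards a goal. In this sketch the cost map is hand-written rather than learned by Inverse Reinforcement Learning, the search is a plain Dijkstra on a 4-connected grid, and the name `predict_path`, the grid and the goal are all hypothetical.

```python
import heapq


def predict_path(cost, start, goal):
    """Return the minimum-cost 4-connected path from start to goal.

    cost[r][c] is the (positive) cost of entering cell (r, c).
    """
    rows, cols = len(cost), len(cost[0])
    dist = {start: 0.0}
    parent = {}
    queue = [(0.0, start)]
    while queue:
        d, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if d > dist[cell]:
            continue                      # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                nd = d + cost[nr][nc]
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    parent[(nr, nc)] = cell
                    heapq.heappush(queue, (nd, (nr, nc)))
    # Walk back from the goal to the start to recover the path.
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    return path[::-1]


# Hypothetical pedestrian cost map: 1 = sidewalk, 9 = road.
cost = [
    [1, 9, 1],
    [1, 9, 1],
    [1, 1, 1],
]
path = predict_path(cost, (0, 0), (0, 2))
```

With these assumed costs, the predicted pedestrian path stays on the low-cost cells and goes around the road instead of crossing it directly, which is the kind of context-dependent behavior a learned cost function is meant to capture.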

Towards an On-line Bayesian Decision-Making framework. The team aims at building a general framework for perception and decision-making in multi-robot/vehicle environments. The navigation will be performed under both dynamic and uncertainty constraints, with contextual information and a continuous analysis of the evolution of the probabilistic collision risk (see above). Results have recently been obtained in cooperation with Renault and Berkeley, by using the “Intention / Expectation” paradigm and Dynamic Bayesian Networks; these results have been published in [60], [61] and patented.

We are currently working on the generalization of this approach, in order to take into account the dynamics of the vehicles and multiple traffic participants. The objective is to design a new framework that overcomes the shortcomings of rule-based reasoning approaches, which usually show good results [64], [49] but scale poorly and lack long-term prediction capabilities. Our research work is carried out through several cooperative projects (Toyota, Renault, project Prefect of IRT Nanoelec, European project ECSEL Enable-S3) and related PhD theses.

Robust state estimation (Sensor fusion)

Context. In order to safely and autonomously navigate in an unknown environment, a mobile robot is required to estimate in real time several physical quantities (e.g., position, orientation, speed). These physical quantities are often included in a common state vector and their simultaneous estimation is usually achieved by fusing the information coming from several sensors (e.g., camera, laser range finder, inertial sensors). The problem of fusing the information coming from different sensors is known as the Sensor Fusion problem and it is a fundamental problem which plays a major role in robotics.
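
In its simplest form, fusing two sensors amounts to combining two independent Gaussian estimates of the same quantity, each weighted by its confidence. The sketch below shows this scalar case only; the function name `fuse` and the numerical values are assumptions chosen for illustration.

```python
def fuse(mean_a, var_a, mean_b, var_b):
    """Fuse two independent Gaussian estimates of the same scalar quantity.

    Equivalent to a single Kalman measurement update: the estimate with
    the smaller variance receives the larger weight.
    """
    gain = var_a / (var_a + var_b)
    mean = mean_a + gain * (mean_b - mean_a)
    var = (1.0 - gain) * var_a
    return mean, var


# Hypothetical example: a noisy position estimate (10.0 m, variance 4.0)
# fused with a more precise one (12.0 m, variance 1.0).
mean, var = fuse(10.0, 4.0, 12.0, 1.0)
```

The fused estimate lies closer to the more precise sensor (about 11.6 m here) and its variance (about 0.8) is smaller than that of either input, which is the basic benefit expected from sensor fusion.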

Objective. A fundamental issue to be investigated in any sensor fusion problem is to understand whether the state is observable or not. Roughly speaking, we need to understand whether the information contained in the measurements provided by all the sensors allows us to estimate the state. If the state is not observable, we need to identify a new observable state. This is a fundamental step in order to properly define the state to be estimated. To achieve this goal, we apply standard analytic tools developed in control theory together with some new theoretical concepts we introduced in [68] (concept of continuous symmetry). Additionally, we want to account for the presence of disturbances in the observability analysis.
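
For intuition, the question "is the state observable?" has a well-known answer in the linear time-invariant special case: the state of x' = Ax, y = Cx is observable if and only if the observability matrix [C; CA; ...; CA^(n-1)] has rank n. The sketch below checks this rank condition numerically. It does not cover the nonlinear case or unknown inputs, and the function name `is_observable` and the example system are assumptions.

```python
import numpy as np


def is_observable(A, C):
    """Kalman rank test for the linear system x' = A x, y = C x."""
    n = A.shape[0]
    blocks = [C @ np.linalg.matrix_power(A, k) for k in range(n)]
    observability_matrix = np.vstack(blocks)
    return bool(np.linalg.matrix_rank(observability_matrix) == n)


# Hypothetical 1D vehicle: state = [position, velocity].
A = np.array([[0.0, 1.0],
              [0.0, 0.0]])
C_pos = np.array([[1.0, 0.0]])   # sensor measures position only
C_vel = np.array([[0.0, 1.0]])   # sensor measures velocity only

observable_from_position = is_observable(A, C_pos)
observable_from_velocity = is_observable(A, C_vel)
```

Measuring position makes the full state observable, whereas measuring only velocity does not: any constant shift of the position leaves the measurements unchanged. In the second case, a properly defined observable state would contain the velocity alone.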

Our approach is to introduce general analytic tools able to derive the observability properties in the nonlinear case when some of the system inputs are unknown (and act as disturbances). We recently obtained a simple analytic tool able to account for the presence of unknown inputs [71], which extends a heuristic solution derived by the team of Prof. Antonio Bicchi [40], with whom we collaborate (Centro Piaggio at the University of Pisa).

Fusing visual and inertial data. Special attention is devoted to the fusion of inertial and monocular vision sensors (which have important applications, for instance in UAV navigation). The problem of fusing visual and inertial data has been extensively investigated in the past. However, most of the proposed methods require a state initialization. Because of the system nonlinearities, the lack of a precise initialization can irreparably damage the entire estimation process. In the literature, this initialization is often guessed or assumed to be known [38], [63], [46]. Recently, this sensor fusion problem has been successfully addressed by enforcing observability constraints [51], [52] and by using optimization-based approaches [62], [45], [66], [53], [74]. These optimization methods outperform filter-based algorithms in terms of accuracy thanks to their capability of relinearizing past states. On the other hand, the optimization process can be affected by the presence of local minima. We are therefore interested in a deterministic solution that analytically expresses the state in terms of the measurements provided by the sensors during a short time interval.

For some years we have been exploring deterministic solutions, as presented in [69] and [70]. Our objective is to improve the approach by taking into account the biases that affect low-cost inertial sensors (both gyroscopes and accelerometers) and to exploit the power of this solution for real applications. This work is currently supported by the ANR project VIMAD (Navigation autonome des drones aériens avec la fusion des données visuelles et inertielles, led by A. Martinelli, Chroma) and tested on a quadrotor UAV. We collaborate with Prof. Stergios Roumeliotis (the leader of the MARS lab at the University of Minnesota) and with Prof. Anastasios Mourikis from the University of California, Riverside. Regarding the usage of our solution for real applications, we collaborate with Prof. Davide Scaramuzza (the leader of the Robotics and Perception group at the University of Zurich) and with Prof. Roland Siegwart from ETHZ.